I was staring at my calculus homework. Problem 7: Find dy/dx if y = (3x² + 5)⁴.

I had no idea where to start. The lecture moved too fast. The textbook explanation made no sense. I’d already wasted 30 minutes getting nowhere.

So I did what every student does now: I opened ChatGPT.

“Solve this for me: Find dy/dx if y = (3x² + 5)⁴”

ChatGPT gave me the full solution. Step by step. The answer was dy/dx = 4(3x² + 5)³(6x).

I copied it. Moved to the next problem. Did the same thing. Finished my homework in 20 minutes. Submitted it. Got full points.

I felt smart.

Test day came.

Same type of problem. Different numbers. No ChatGPT to help me.

I stared at it. I had no idea what to do.

I failed.

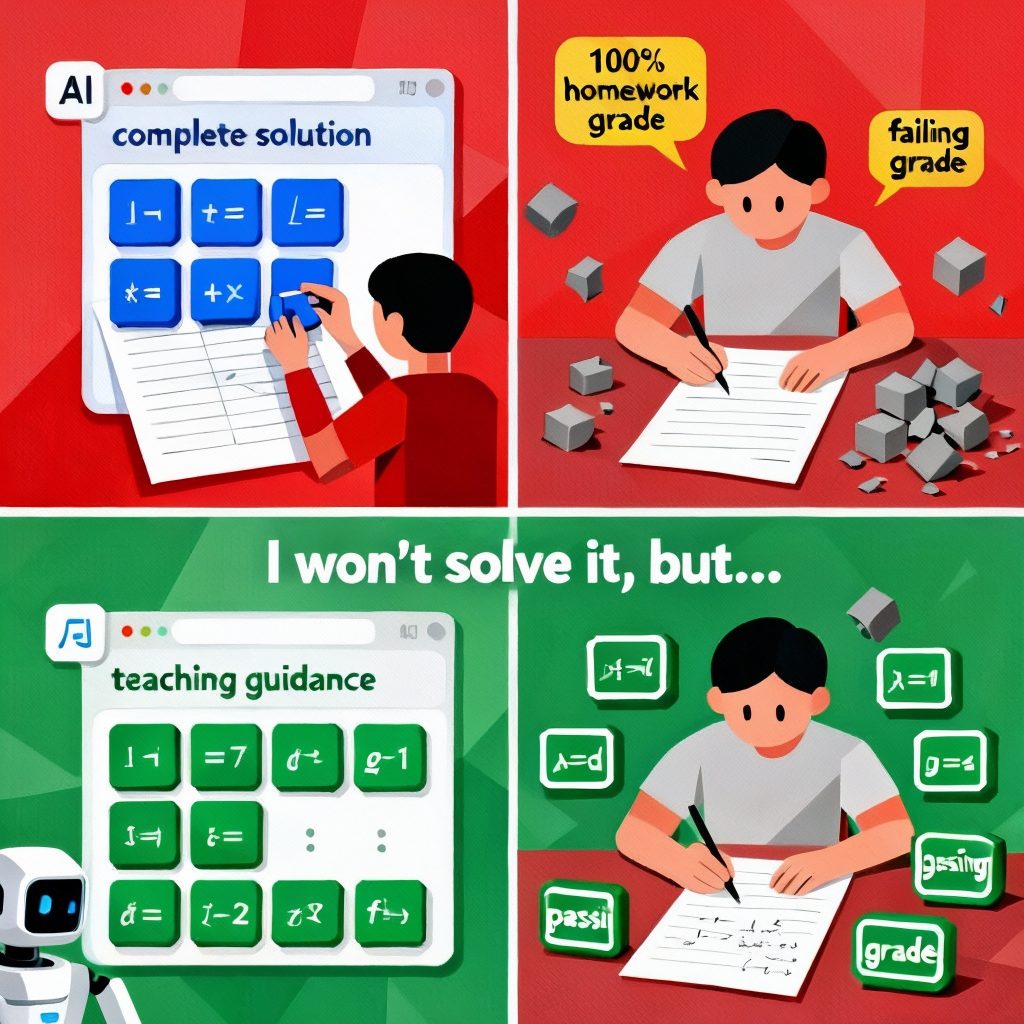

The Problem With Getting Answers

Here’s what I did wrong: I got the answer without understanding the concept.

ChatGPT showed me the steps. I copied them. But I never learned WHY those steps worked or WHEN to use them.

So when the test came with a slightly different problem, I was lost. Because I’d never actually learned how to do derivatives. I’d just learned how to copy answers.

And I wasn’t the only one. Half my class did the same thing. We all had perfect homework scores. We all bombed the test.

The teacher noticed. Started making tests way harder to compensate for AI. Made the class even worse for everyone.

I realized: Using AI to get answers was making me dumber, not smarter.

What I Tried Instead

After failing that test, I was desperate. I had another calculus test coming up in two weeks. I needed to actually learn this stuff.

So I tried something different.

Next time I got stuck – this time it was y = sin(2x³) – instead of asking ChatGPT to solve it, I asked:

“I don’t understand how to find the derivative of this. Can you explain what I need to know?”

Here’s what happened:

ChatGPT: “Okay, this problem needs something called the chain rule. Have you heard of it?”

Me: “Yeah but I don’t really get what it is.”

ChatGPT: “Alright, think of it like peeling an onion. Your function has layers – one function wrapped inside another function. The chain rule is how you take the derivative when you have these layers.”

Me: “Okay…”

ChatGPT: “Let me show you with a super simple example first. Way simpler than your problem. Look at this: y = (x + 1)²

Can you see the two layers here?”

Me: “Not really?”

ChatGPT: “The outer layer is ‘something squared.’ The inner layer is ‘x + 1.’ So you have (x + 1) sitting inside the square. Make sense?”

Me: “Oh, okay yeah. The x + 1 is the thing being squared.”

ChatGPT: “Exactly. Now here’s how chain rule works. You peel the onion from outside to inside:

Step 1: Take the derivative of the outer layer, keeping the inside the same. The outer layer is ‘something²’, so its derivative is 2(something)¹ = 2(something). Since the inside is (x + 1), we get: 2(x + 1)

Step 2: Multiply that by the derivative of the inner layer. The inner layer is (x + 1), and its derivative is just 1.

Step 3: Multiply them together: 2(x + 1) × 1 = 2(x + 1)

That’s your answer. You worked from outside layer to inside layer, then multiplied.”

Me: “Wait, so for any problem like this, I do outside first then inside?”

ChatGPT: “Yes! Outside layer derivative, then multiply by inside layer derivative. That’s the chain rule.”

Me: “Okay that actually makes sense.”

ChatGPT: “Good. Now try your problem: y = sin(2x³)

First question: what’s the outer layer and what’s the inner layer?”

Me: “Um… the outer layer is the sin?”

ChatGPT: “Right! The outer function is sin(something). And the inner layer?”

Me: “The 2x³?”

ChatGPT: “Exactly. You have 2x³ sitting inside the sine function. Now use chain rule. What’s the derivative of the outer layer – sin(something)?”

Me: “cos(something)?”

ChatGPT: “Yes! So you get cos(2x³) – you keep the inside the same for now. What’s next?”

Me: “Multiply by the derivative of the inside?”

ChatGPT: “Right. What’s the derivative of 2x³?”

Me: “6x²”

ChatGPT: “Perfect. So multiply them together. What do you get?”

Me: “cos(2x³) × 6x² ?”

ChatGPT: “That’s it! You just used chain rule. The answer is 6x²cos(2x³).”

Me: “Wait, that’s it? I actually got it?”

ChatGPT: “You did. Now try the next problem on your homework yourself and see if you can do it without asking me.”

I looked at the next problem: y = (4x – 3)⁵

Okay. Outer layer is something to the 5th power. Inner layer is 4x – 3.

Derivative of outer: 5(something)⁴ = 5(4x – 3)⁴

Derivative of inner: 4

Multiply them: 5(4x – 3)⁴ × 4 = 20(4x – 3)⁴

I checked with ChatGPT. I got it right.

Holy shit, I actually understood this.
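For anyone who likes seeing it in symbols, here is roughly how the rule and the problems from this post look written out. The names f (outer layer) and g (inner layer) are my own labels, not something from the chat, so treat this as a sketch of the pattern rather than the official textbook statement.

```latex
% Chain rule: derivative of the outer layer (inside left alone)
% times the derivative of the inner layer.
\[
\frac{d}{dx}\, f\bigl(g(x)\bigr) = f'\bigl(g(x)\bigr) \cdot g'(x)
\]

% The problems from this post, each as (outer derivative) x (inner derivative).
\begin{align*}
y &= (x+1)^2     & \frac{dy}{dx} &= 2(x+1) \cdot 1 = 2(x+1) \\
y &= \sin(2x^3)  & \frac{dy}{dx} &= \cos(2x^3) \cdot 6x^2 = 6x^2\cos(2x^3) \\
y &= (4x-3)^5    & \frac{dy}{dx} &= 5(4x-3)^4 \cdot 4 = 20(4x-3)^4 \\
y &= (3x^2+5)^4  & \frac{dy}{dx} &= 4(3x^2+5)^3 \cdot 6x = 24x(3x^2+5)^3
\end{align*}
```

The last line is Problem 7 from the start of this post. It is the same answer ChatGPT gave me, just with the 4 and the 6x multiplied together.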

The Real Test (Again)

Two weeks later. Next calculus test.

This time, when I saw derivative problems with functions inside other functions, I could actually do them.

Not perfectly – I still made some mistakes. But I knew what to look for. I could identify the layers. I understood the logic of working outside to inside.

I got a B.

Not amazing, but compared to failing? Huge improvement.

And more importantly: I didn’t forget everything after the test. When chain rule came up again on the final, I still remembered it. Because I actually understood how it worked.

What I Learned About Math

Math isn’t about memorizing formulas or copying steps.

It’s about understanding the logic of WHY methods work.

Here’s what I noticed:

When I used AI to GET ANSWERS:

- Homework: easy, high scores

- Understanding: zero

- Tests: failed every time

- Long-term: remembered nothing

When I used AI to UNDERSTAND CONCEPTS:

- Homework: took longer, had to think

- Understanding: actually got it

- Tests: could solve problems independently

- Long-term: remembered because I understood

The second way is harder in the moment. But it’s the only one that actually works.

The Pattern That Works

After that experience, I used this approach for everything in math:

Instead of: “Solve this problem”

Ask: “I don’t understand this concept. Can you explain it?”

Then the AI would:

- Use an analogy to explain the concept (onion layers, etc.)

- Show me a SIMPLE example first and work through it completely

- Have me apply the same logic to my harder problem

- Check my work and point out mistakes in my logic (not just give the answer)

This worked for everything:

Quadratic formula: AI explained it with a simple example (x² – 5x + 6 = 0), showed me how to identify a, b, c, walked me through the formula. Then I applied it to my harder problem.

Trig identities: AI explained the relationship between sin and cos using the unit circle as an analogy. Then it showed me a simple identity and had me work through harder ones using the same logic.

Integrals: AI explained “integral = area under the curve” with a visual description and worked through a simple rectangle example. Then I applied the same idea to my actual problems.

Same principle every time: Analogy + simple example worked completely + apply to my problem = I actually understand.
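To make two of those simple examples concrete, here is a minimal Python sketch. The function names `solve_quadratic` and `area_under` are ones I made up for illustration, and the rectangle (height 3, from x = 0 to x = 2) is an assumed example, since I didn't write down the exact one the AI used.

```python
import math

def solve_quadratic(a, b, c):
    """Real roots of a*x^2 + b*x + c = 0 using the quadratic formula."""
    if a == 0:
        raise ValueError("a must be nonzero for a quadratic")
    discriminant = b * b - 4 * a * c
    if discriminant < 0:
        return ()  # no real roots
    root = math.sqrt(discriminant)
    return ((-b + root) / (2 * a), (-b - root) / (2 * a))

def area_under(f, start, end, rectangles=1000):
    """Approximate the area under f from start to end by adding up thin rectangles."""
    width = (end - start) / rectangles
    # Each rectangle's height is f evaluated at the middle of its slice.
    return sum(f(start + (i + 0.5) * width) * width for i in range(rectangles))

# x^2 - 5x + 6 = 0, so a = 1, b = -5, c = 6
print(solve_quadratic(1, -5, 6))                 # (3.0, 2.0)

# Assumed rectangle example: constant height 3 between x = 0 and x = 2
print(round(area_under(lambda x: 3, 0, 2), 6))   # 6.0
```

The rectangle case is useful exactly because you can check it in your head: 3 × 2 = 6. Once that makes sense, swapping in a curve for the flat line is the same idea with more slices.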

The Golden Rule I Follow Now

Here’s how I know if I actually understand something:

Can I do a similar problem without AI?

If the test gave me a different chain rule problem right now, could I solve it? If yes, I learned it. If no, I just copied steps.

For chain rule:

- I can identify when I need it (function inside another function)

- I can explain the logic (outside layer first, then inside, then multiply)

- I can do new problems using the same method

When I can do that, I don’t need to memorize. I understand the pattern.

That’s when math stops being impossible and starts being something you can actually do.
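One way to check your own chain rule answer without asking AI for the solution is to compare it against a numerical slope. This is a rough sketch, not a proof: `numeric_slope` and `matches` are hypothetical helper names, and the test points and tolerance are arbitrary choices of mine.

```python
import math

def numeric_slope(f, x, h=1e-5):
    """Estimate f'(x) from two nearby points (central difference)."""
    return (f(x + h) - f(x - h)) / (2 * h)

def matches(f, claimed_derivative, points=(-1.3, -0.4, 0.7, 1.1), tol=1e-5):
    """True if the claimed derivative agrees with the numeric slope at every test point."""
    for x in points:
        expected = numeric_slope(f, x)
        got = claimed_derivative(x)
        if abs(expected - got) > tol * max(1.0, abs(expected)):
            return False
    return True

# y = sin(2x^3), my answer: 6x^2 cos(2x^3)
print(matches(lambda x: math.sin(2 * x**3),
              lambda x: 6 * x**2 * math.cos(2 * x**3)))   # True

# y = (4x - 3)^5, my answer: 20(4x - 3)^4
print(matches(lambda x: (4 * x - 3)**5,
              lambda x: 20 * (4 * x - 3)**4))             # True

# Common mistake: forgetting to multiply by the inner derivative
print(matches(lambda x: (4 * x - 3)**5,
              lambda x: 5 * (4 * x - 3)**4))              # False
```

It only tells you whether your answer is right, not how to get there, which is the point. You still have to do the outside-then-inside work yourself.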

Why I Built a Tool That Won’t Give You Answers

After using this approach for a semester, my grades went from Ds to Bs. Sometimes As.

But here’s the problem: It’s really easy to slip back into asking for answers. Especially when you’re tired or stressed or just want homework done.

I’d catch myself typing “solve this for me” and have to force myself to rephrase it.

That’s why I built something that stops me automatically.

The SuperPrompt is designed to refuse when you ask for answers:

“You’re asking me to solve this for you. Let me explain the concept instead, then you solve it yourself.”

Then it does what ChatGPT did in that conversation:

- Uses analogies and simple language

- Shows you a simple example worked completely

- Makes you apply it to your problem

- Points out where your logic breaks down (not just gives the answer)

It’s like having the version of ChatGPT that makes you learn instead of making you dependent.

And it works with any AI you use – ChatGPT, Claude, whatever. Same prompt, same approach.

The complete eBook shows this system for every subject – not just math. How to use AI to understand instead of getting answers, how to build real competence, how to avoid the mistakes that make AI hurt instead of help.

Both are designed for one thing: to help you get better at hard subjects for real, not just fake your way through homework.

If You’re Struggling With Math

The problem might not be that you’re “not a math person.”

It might be that you’re getting answers instead of understanding concepts.

You’re copying steps instead of learning logic.

That’s fixable.

Next time you’re stuck on math:

Don’t ask AI to solve it.

Ask: “Can you explain this concept with a simple example?”

Let it work through a simple version completely.

Then apply the same logic to your problem yourself.

Do that enough times, and you’ll notice: You’re getting better. Not just at homework, but at actually doing math independently.

That’s what understanding does.

And that’s what makes math stop being terrifying and start being manageable.

Want the system that makes this automatic?

Get the SuperPrompt that refuses to give you answers and walks you through understanding instead.

(Works with ChatGPT, Claude, or any AI you use)

P.S. Next time you’re stuck on math, try this: Ask AI for a simple example first, not the answer to your problem. Work through the simple one, then apply that logic yourself. If you can, you actually learned something.

If you enjoyed this blog post, you might also enjoy my other posts on meta-learning (i.e., learning how to learn) here: