I wrote a practice essay for my upcoming finals. Felt pretty good about it. Pasted it into ChatGPT and asked: “What should I improve about this essay?”

ChatGPT gave me feedback:

“Your essay could be stronger with:

- More evidence to support your second argument

- Deeper analysis in the third paragraph

- A more specific thesis statement

- Better transitions between ideas”

Okay, useful. I revised my essay following that feedback. Added more evidence. Made my analysis “deeper” (whatever that meant). Made my thesis more specific.

Wrote another practice essay. Asked for feedback again.

ChatGPT: “Much better! Your arguments are stronger now. Nice improvements on the analysis.”

I felt ready. I’d practiced, gotten feedback, made improvements.

Test day came. I wrote my essay using everything I’d practiced.

Got it back: 3.5 out of 10 points.

Wait, what?

I’d followed ChatGPT’s feedback. I’d made the improvements it suggested. I thought I was ready.

Clearly, I wasn’t.

The Problem With AI Feedback

Here’s what I learned the hard way: ChatGPT DOES give you feedback. But it’s generic feedback that doesn’t match what YOUR teacher actually wants.

ChatGPT told me to “add more evidence.” I added more quotes.

My teacher took off points because I didn’t cite the SPECIFIC type of evidence she wanted – primary source documents, not just any quotes.

ChatGPT told me to make my analysis “deeper.” I explained more.

My teacher took off points because “analysis” in her class meant connecting to broader historical themes, not just explaining more details.

ChatGPT told me to make my thesis “more specific.” I narrowed my focus.

My teacher took off points because my thesis didn’t address all three aspects the prompt asked for.

ChatGPT’s idea of a “good essay” didn’t match my teacher’s grading rubric.

So I practiced getting better at what ChatGPT valued. But my teacher graded on different criteria.

The gap between AI’s feedback and my actual grades was making me WORSE, not better. Because I was improving in the wrong directions.

I did this for months:

Practice essay → ChatGPT feedback → make improvements → real test → bad grade

Every time, I thought I was practicing effectively. Every time, I was practicing the wrong things.

The Thing That Changed Everything

Right before finals, my teacher did something unusual: she gave us the actual grading rubric.

Most teachers don’t do this. But she handed out a sheet showing exactly how she’d grade our final essay:

- Thesis statement addressing all aspects of the prompt (20 points)

- Three arguments supported with specific textual evidence (30 points)

- Analysis of historical/thematic significance (30 points)

- Proper structure and citations (10 points)

- Clear writing (10 points)

I stared at this rubric and realized: this is literally the answer key.

If I know EXACTLY what she’s grading on, I can practice hitting EXACTLY those criteria.

But how do I practice when there’s no practice test?

That’s when I had the idea: what if I made AI grade me using THIS rubric instead of its own generic standards?

What I Did Instead

I tried something different for my next practice essay.

Instead of asking ChatGPT “What should I improve?”, I gave it the actual rubric first and said:

“Here’s my teacher’s grading rubric. Grade this essay using these exact criteria. Tell me my score for each category and what’s specifically missing to get full points.”
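If you'd rather not retype this every round, the prompt can be assembled from the rubric itself. This is only a minimal sketch: `RUBRIC` and `build_grading_prompt` are names I made up for illustration, and the function just builds text for you to paste into whatever AI you use. It doesn't call any API.

```python
# Hypothetical helper: these names are illustrative, not part of any tool.
# The rubric rows are copied from the sheet my teacher handed out.
RUBRIC = [
    ("Thesis statement addressing all aspects of the prompt", 20),
    ("Three arguments supported with specific textual evidence", 30),
    ("Analysis of historical/thematic significance", 30),
    ("Proper structure and citations", 10),
    ("Clear writing", 10),
]


def build_grading_prompt(rubric, essay):
    """Return a prompt that states the rubric before the essay."""
    lines = ["Here's my teacher's grading rubric:"]
    for criterion, points in rubric:
        lines.append(f"- {criterion} ({points} points)")
    lines.append("")
    lines.append(
        "Grade this essay using these exact criteria. Tell me my score for "
        "each category and what's specifically missing to get full points."
    )
    lines.append("")
    lines.append("Essay:")
    lines.append(essay)
    return "\n".join(lines)


prompt = build_grading_prompt(RUBRIC, "My practice essay text goes here.")
print(prompt)
```

Putting the rubric first every time is the whole point: the grading criteria travel with the essay, so the AI never has to fall back on its own idea of a good essay.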

Here’s what happened:

ChatGPT’s response:

“Grading based on your teacher’s rubric:

Thesis statement addressing all aspects of prompt (20 points): 14/20 Your thesis mentions economic and social factors but doesn’t address political factors, which the prompt specifically asks for. To get full 20 points, you need to explicitly mention all three aspects the prompt lists.

Three arguments with specific textual evidence (30 points): 18/30

- First argument: Good, uses direct quote from primary source document (full points)

- Second argument: You cite a quote but it’s from a secondary source. Your teacher’s rubric specifies PRIMARY source evidence. You need a document from the time period, not a historian’s analysis.

- Third argument: You explain the concept but don’t cite any textual evidence at all. Need at least one primary source citation here.

Analysis of historical/thematic significance (30 points): 15/30 You explain what happened and why, but you’re not connecting to the broader historical themes. Your teacher’s rubric specifically asks you to relate events to themes like “power dynamics” or “social change” – you need to explicitly name and analyze these themes.

Proper structure and citations (10 points): 9/10 Good structure. One citation missing page number.

Clear writing (10 points): 8/10 Clear overall but paragraph 3 has two run-on sentences.

Total: 64/100”

Holy shit.

THIS was completely different from before.

Not “add more evidence” – but “you need PRIMARY source evidence, not secondary sources.”

Not “deeper analysis” – but “connect to the specific themes your teacher listed: power dynamics and social change.”

Not “more specific thesis” – but “address the political factors the prompt asks for.”

Now I knew EXACTLY what my teacher wanted. Not what generic “good writing” looks like, but what THIS teacher grades on.
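It also helps to see where the points actually went. Here's a rough sketch that adds up the category scores from that graded response and sorts them by points lost. The short category labels are my own abbreviations of the rubric lines; the numbers are the ones ChatGPT reported.

```python
# Scores copied from the graded response: (earned, possible) per category.
scores = {
    "Thesis": (14, 20),
    "Evidence": (18, 30),
    "Analysis": (15, 30),
    "Structure and citations": (9, 10),
    "Clear writing": (8, 10),
}

total_earned = sum(earned for earned, _ in scores.values())
total_possible = sum(possible for _, possible in scores.values())

# Sort categories by points lost, largest gap first.
gaps = sorted(
    ((name, possible - earned) for name, (earned, possible) in scores.items()),
    key=lambda item: item[1],
    reverse=True,
)

print(f"Total: {total_earned}/{total_possible}")
for name, lost in gaps:
    print(f"{name}: {lost} points left on the table")
```

The total comes out to 64/100, and the biggest gaps are analysis (15 points) and evidence (12 points), so those are the first things to fix in the next round.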

The Practice Loop That Actually Worked

I didn’t just read the feedback and move on. I used it to actually get better.

Round 1: First practice (64/100)

I knew what to fix. I wrote another practice essay implementing those exact improvements:

- Added political factors to thesis

- Replaced secondary source with primary source document

- Added primary source citation to third argument

- Explicitly connected events to “power dynamics” and “social change” themes

Asked ChatGPT to grade again with same rubric.

Round 2: Second practice (78/100)

Better! But ChatGPT pointed out new issues: my connection to themes was surface-level, just mentioning them without explaining how the events reflected those themes.

Revised again.

Rounds 3-5: Iterative improvement

Each round: write practice essay, get graded on rubric, see exactly what’s missing, implement those specific improvements.

By round 5: consistently scoring 90-95/100 on practice essays.

More importantly: I KNEW what my teacher wanted. I’d practiced hitting every rubric point multiple times.
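To keep track of rounds like these, a small score log is enough. This is a sketch under a few assumptions: `is_ready` is a hypothetical helper, "ready" is taken to mean the last two practices both scored 90 or higher, and only the first two scores (64 and 78) are real. The scores for rounds 3 to 5 are made up to illustrate the idea.

```python
def is_ready(scores, threshold=90, streak=2):
    """True when the last `streak` scores are all at or above `threshold`."""
    if len(scores) < streak:
        return False
    return all(score >= threshold for score in scores[-streak:])


history = [64, 78]        # rounds 1 and 2, as described above
history += [85, 91, 93]   # rounds 3-5: made-up values, for illustration only

for round_number in range(1, len(history) + 1):
    so_far = history[:round_number]
    # Change versus the previous round (0 for the first round).
    change = so_far[-1] - so_far[-2] if round_number > 1 else 0
    print(
        f"Round {round_number}: {so_far[-1]}/100 "
        f"({change:+d}) ready={is_ready(so_far)}"
    )
```

Requiring a streak rather than a single high score is a deliberate choice: one good round could be a lucky prompt or a lenient grading pass, while two in a row is harder to get by accident.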

The Real Finals

Finals day came.

I looked at the essay prompt. Recognized the pattern – I’d practiced this type of question five times.

I wrote my essay hitting every rubric point:

- Thesis addressing all aspects ✓

- Three arguments with primary source evidence ✓

- Analysis connecting to power dynamics and social change themes ✓

- Proper structure ✓

Turned it in feeling confident.

Not “hoping I did well” confident. But “I know I hit every criterion because I practiced it five times” confident.

Got my grade back: 92/100.

Almost exactly what my practice scores predicted.

For the first time, my practice actually matched my performance.

The Real Difference

Here’s what changed:

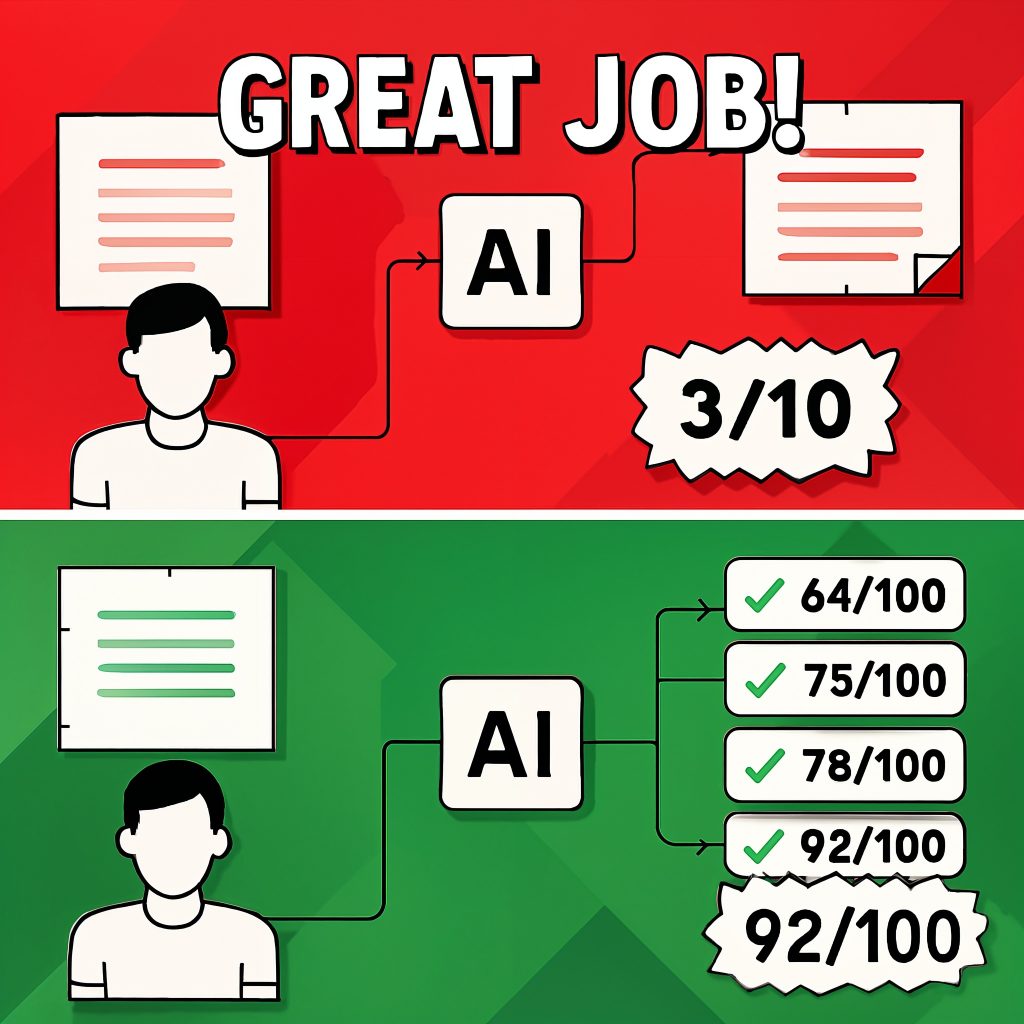

Before (generic AI feedback):

- AI: “Add more evidence, make analysis deeper, be more specific”

- Me: Adds more quotes, explains more, narrows focus

- My score: 3.5/10

- Problem: I’m improving what AI thinks is good, not what my teacher grades on

- Walked into tests hoping I’d practiced the right things

- Usually hadn’t

After (rubric-based AI feedback):

- AI: “You need PRIMARY source evidence, not secondary. Connect to these specific themes: power dynamics and social change. Address all three prompt aspects: economic, social, AND political.”

- Me: Makes those EXACT improvements

- Practice scores: 90-95/100

- Real test score: 92/100

- Gap between practice and reality: almost none

- Walked into test KNOWING I was ready

- Actually was

The difference was specificity. Not “be better” but “do THIS specific thing your teacher grades on.”

The Pattern Works for Everything

Once I realized this, I used it for every test where I had grading criteria:

Math finals: My teacher gave us the problem types and point distribution. I generated practice problems matching those exact types and had AI check my work against the grading method she showed us.

Practice scores: 85-90%. Real test: 88%.

History presentation: Got the rubric (content 40%, delivery 30%, visuals 20%, citations 10%). Practiced presentation, had AI score each category, improved weak areas.

Practice scores: 90+. Real presentation: A-.

Science lab reports: Teacher finally showed us the grading breakdown. Used it to practice, got feedback on exact criteria.

Practice scores matched real scores within 5 points every time.

Same principle: accurate feedback based on real criteria = accurate preparation.
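When a rubric uses percentage weights, like the history presentation one (content 40%, delivery 30%, visuals 20%, citations 10%), the overall score is a weighted sum. A quick sketch, with `weighted_score` as a hypothetical helper and practice scores that are made up for illustration:

```python
# Weights from the presentation rubric, as whole-number percentages.
WEIGHTS = {"content": 40, "delivery": 30, "visuals": 20, "citations": 10}


def weighted_score(category_scores, weights=WEIGHTS):
    """Combine per-category scores (0-100) into one weighted total (0-100)."""
    return sum(category_scores[name] * w for name, w in weights.items()) / 100


# Made-up practice scores, for illustration only.
practice = {"content": 95, "delivery": 80, "visuals": 90, "citations": 100}
print(weighted_score(practice))
```

With these example numbers the total is 90.0, and raising delivery from 80 to 90 would add 3 points to it, which is more than perfecting citations ever could.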

Why I Built Something to Make This Easier

After figuring this out, I used rubric-based practice for every exam.

But here’s what I noticed: even knowing the method, I kept making mistakes.

Mistake 1: Forgetting to paste the rubric

I’d be tired, working on my 3rd practice essay, and just ask “How’s this?” without giving the rubric again.

AI would go back to generic feedback. I’d waste a practice round.

Mistake 2: Asking AI to improve it instead of telling me how

When frustrated, I’d slip: “Can you strengthen this second argument for me?”

AI would rewrite it. I’d copy it. I’d just cheated myself out of learning.

Mistake 3: Not tracking progress clearly

Practice 3 got 78/100. Practice 4 got 76/100. Wait, I got WORSE? Or was the grading just inconsistent? No idea.

Mistake 4: Giving up too early

One practice at 64/100, see all the improvements needed, feel overwhelmed, stop.

Didn’t realize I needed 4-5 rounds. Just thought “this isn’t working.”

The method worked. But executing it consistently was hard when tired or stressed.

That’s why I built the SuperPrompt.

What the SuperPrompt Actually Does

The SuperPrompt automates the rubric-based practice process so you don’t have to remember all the steps:

For this specific use case (exam prep with rubric):

- Locks in your rubric at the start – you can’t forget it

- Generates practice questions matching your test format

- Grades every practice using your exact rubric criteria

- Tracks your scores across rounds so you see improvement

- Stops you when you try to ask it to improve your work for you

- Tells you when you’re consistently ready (90+ on multiple practices)

It’s like having a coach who makes sure you execute the training plan correctly every time, even when you’re tired.

The rubric method works great when you HAVE a rubric. But what about:

- When your teacher won’t explain assignments

- When you’re completely lost on a concept and need to understand it before you can practice

- When you need to cram for an exam last minute with limited time

The SuperPrompt handles all of those situations too – it adapts to what you need.

Think of it like having a personal trainer at the gym:

- Spots you when you’re about to cheat (asking AI to do your work)

- Tracks your progress so you know you’re improving

- Adjusts the workout based on your specific situation

- Makes sure you’re doing the exercises correctly every time

- Tells you when you’re ready to test yourself for real

The complete guide (eBook) breaks down all these different study situations and shows you the framework behind each one – so you understand the full system, not just one technique.

If You Have a Rubric

If your teacher gives you a grading rubric for an upcoming test:

You now know what to do. Practice with the rubric. Get specific feedback on exact criteria. Improve what your teacher actually grades on.

The SuperPrompt makes sure you do it correctly and consistently – especially when you’re tired, stressed, or tempted to take shortcuts.

Works with ChatGPT, Claude, or whatever AI you use.

Ready to practice like you have a personal coach?

P.S. Next time your teacher gives you a rubric, make five practice tests with it. You’ll walk into that exam knowing exactly what you need to do – because you’ve already done it five times.

If you enjoyed this blog post, you might also enjoy my other posts on meta-learning (i.e., learning how to learn) here: